In Part 1, we argued that accounting is not math. It's trust, formalized. That distinction reframes accounting from numerical computation to structural verification. One of our readers initially grouped our insight with a broader trend, suggesting that the idea wasn't entirely novel.

But after a structured rebuttal, even they acknowledged:

“This level of rigor in pre-authorization… is not a widely established industry standard.”

That evolution matters. It confirms what we've been saying from the start:

The novelty isn’t in having AI agents. It’s in governing them.

Let’s address a likely assumption up front:

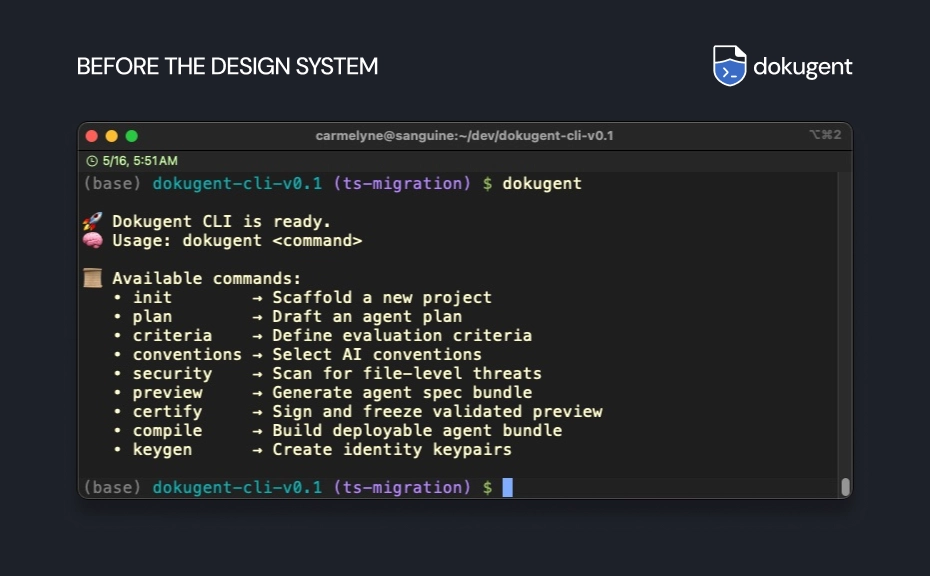

You might think Dokugent is just another agent framework—a CLI-based tool for building automated workflows.

But it isn’t.

Dokugent doesn’t focus on what agents can do. It focuses on what they should be allowed to do — and under whose authority.

This changes the system-level question from:

“Can this agent execute a task?” to “Who authorized this task, and under what conditions?”

Most modern AI tooling prioritizes capability and logs what happened after the fact. Dokugent reverses that. It prioritizes pre-authorization, traceability, and verifiable delegation—before the agent ever acts.

Even without direct advisory boards, Dokugent’s roadmap aligns with high-level concerns voiced by AI leaders. We’ve systematically mapped public statements by figures like Satya Nadella, Sundar Pichai, Yann LeCun, and others to actionable gaps in agent infrastructure.

Nadella’s call for agent auditability, Pichai’s emphasis on compliance, and Sam Altman’s focus on SDK trust layers directly echo the foundations of Dokugent’s design. Our certify, simulate, and trace commands didn’t arise in isolation; they’re direct responses to industry pain points articulated at the highest levels.

By mapping recurring themes from public leadership statements to systemic infrastructure gaps, Dokugent offers early responses to billion-dollar concerns—without waiting for permission or endorsement.

In accounting, every journal entry links back to a person, a time, and a reason. Accountability is built into the medium. The numbers tell a story—not just of what changed, but who changed it, why it happened, and whether it holds up to scrutiny.

That mindset scales.

This is more than a metaphor. Here's a practical translation of accounting mechanisms into agentic execution models.

| Accounting Term | Dokugent Parallel | Purpose |
| --- | --- | --- |
| Journal Entry | plan file | Declare intention |
| Audit Trail | trace logs | Record every step |
| Reconciliation | simulate + dryrun | Test without real-world impact |
| Certification | certify | Prove authorized review |
| Ledger Reference | doku:// URIs | Link to historical context |

This isn’t metaphor. It’s design inheritance. Just like accountants need structured records to defend financial actions, AI systems need structured context to defend autonomous decisions.

By bringing the logic of accounting into agentic AI, Dokugent provides:

- Agency with safeguards: Plans aren’t just task lists; they’re declarations of accountability. Every agent begins with an explicit, reviewable plan that defines its scope and limits.

- Trust that travels with the task: Once reviewed and certified, a plan becomes more than a file; it’s a portable, cryptographically signed artifact. Wherever it moves, trust moves with it.

- Built for audit, not obstruction: Dokugent’s structure invites transparency. You can inspect any plan like a ledger, not to slow it down, but to ensure its legitimacy holds across time and teams.

This is how you build AI systems that can operate in real-world, high-stakes environments. Where every action must answer the question: who authorized this, and under what constraints?

Accounting showed us how to encode trust across time. Dokugent just extends that architecture to intelligent systems.

Dokugent generalizes the structural mindset of accounting to any system where agents act.

Let’s preempt some common assumptions:

Isn’t Dokugent just chaining tasks together? That’s a fair question. But no: Dokugent doesn’t just chain tasks. It enforces a plan-review-certify chain that must be cryptographically acknowledged before execution. This creates an immutable record of intent and consent.
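To make that chain concrete, here is a minimal Python sketch. It is not Dokugent's actual implementation or file format. It links hypothetical plan, review, and certify steps with SHA-256 hashes so that any later edit is detectable. The names `append_step` and `is_executable`, the record fields, and the actors are our assumptions for illustration. Plain hashing only gives tamper evidence; a real system would also sign each step.

```python
import hashlib
import json

# Hypothetical stage names, taken from the plan-review-certify wording above.
REQUIRED_STAGES = ["plan", "review", "certify"]


def _digest(body: dict) -> str:
    """SHA-256 over the canonical (sorted-key) JSON form of a step."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_step(chain: list, stage: str, actor: str, payload: str) -> list:
    """Return a new chain with one more step linked to the previous step's hash."""
    prev = chain[-1]["hash"] if chain else None
    body = {"stage": stage, "actor": actor, "payload": payload, "prev": prev}
    return chain + [{**body, "hash": _digest(body)}]


def is_executable(chain: list) -> bool:
    """True only if the stages appear in order and every hash link is intact."""
    if [step["stage"] for step in chain] != REQUIRED_STAGES:
        return False
    prev = None
    for step in chain:
        body = {key: step[key] for key in ("stage", "actor", "payload", "prev")}
        if step["prev"] != prev or step["hash"] != _digest(body):
            return False
        prev = step["hash"]
    return True


chain = append_step([], "plan", "alice", "summarize Q3 invoices")
chain = append_step(chain, "review", "bob", "approved")
uncertified = list(chain)  # reviewed, but never certified
chain = append_step(chain, "certify", "carol", "certified")

# Simulate someone editing the plan after it was certified.
tampered = [dict(step) for step in chain]
tampered[0]["payload"] = "wire funds"
```

In this sketch, `is_executable(chain)` holds, while both the uncertified chain and the tampered copy are rejected. The point is the ordering: the check happens before anything runs, not in a log afterward.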

Isn’t this just DevOps for agents? In tooling, yes. In philosophy, no. DevOps focuses on reliability. Dokugent focuses on responsibility.

Don’t other tools already do this? Some do parts. Most are either internal mini-agent tools or opaque dashboards. Dokugent is:

- CLI-first

- Markdown-native

- Cryptographically signed

- Designed for agent-native developer governance

Dokugent may have been inspired by accounting, but the principles behind it—structured intent, traceable delegation, and verifiable consent—apply far beyond finance.

In fact, they’re especially relevant in domains where the cost of AI mistakes is high, and the appetite for change is low. These are sectors with legacy systems, regulatory pressure, and deeply embedded cultural habits. Ironically, those are the exact conditions where agentic governance isn’t just useful—it’s necessary.

- Education: Who approved the AI lesson plan? When students are exposed to AI-generated teaching materials, we need systems that can prove what was taught, why it was chosen, and who authorized the content. In schools, trust isn’t optional; it’s foundational.

- Healthcare: Who authorized that treatment suggestion? Clinical decisions made by or with the help of AI need pre-cleared protocols and auditable trails. It’s not enough to say “the model suggested it.” Lives depend on verifiable review and sign-off.

- Governance: Who reviewed that policy draft summary? When AI summarizes laws or drafts policy memos, transparency about inputs, framing, and delegation becomes a civic requirement. Dokugent offers a model of agent transparency that aligns with democratic values.

- Software Development: Who signed off on the codegen plan? AI can now write and merge code. But when systems go down, who’s responsible? Dokugent embeds review and accountability into each step of the agent’s lifecycle, closing the gap between generation and governance.

- Finance: Who forecasted the risk profile and when? Markets run on trust. AI that makes predictions or allocates resources must carry an audit trail, one that doesn’t vanish with a server restart. Dokugent preserves context, authorship, and approval history for every financial move.

These aren’t just compliance checkboxes. These are institutions built on explicit trust. And as they adopt agentic systems, the absence of visible delegation mechanisms becomes a liability. Dokugent turns that liability into infrastructure.

Agentic AI challenges us to rethink power—not in terms of capability, but in terms of accountability. It’s not enough to design agents that can act. We must also design the systems that govern how and under whose authority they act.

Too often, AI tooling focuses on what models can do. More accuracy, faster inference, broader coverage. But Dokugent asks a different question:

Who said the agent should do this—and under what terms?

You might assume capability alone signals progress. But in regulated environments, capability often needs to be paired with explicit control mechanisms. It’s the difference between a helpful assistant and an untraceable actor.

Governance reframes speed as responsibility. It provides the boundaries and context that make rapid execution not just possible—but reliable, traceable, and aligned with intent.

Dokugent enforces this by design:

- Pre-authorized plans, not retroactive logs: Plans must be reviewed and certified before execution. There’s no room for "oops" in high-stakes workflows.

- Signed approvals, not silent defaults: Every reviewer and certifier is cryptographically stamped into the agent’s file. Trust is explicit, not implied.

- Traceable actions, not black-box guesses: Post-action blame isn’t enough. Dokugent gives receipts before the agent moves.
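The three guarantees above can be sketched as a single gate in front of the agent. This is an illustrative Python example under stated assumptions, not Dokugent's real mechanism: the `KEYS` table, the role identifiers, and the functions `sign`, `is_authorized`, and `run_agent` are hypothetical. It also swaps in HMAC with shared secrets from the standard library as a stand-in for signatures; a production system would more likely use asymmetric keys so that verifiers cannot forge stamps.

```python
import hashlib
import hmac

# Hypothetical per-person secrets, for illustration only.
KEYS = {
    "reviewer:bob": b"bob-secret",
    "certifier:carol": b"carol-secret",
}


def sign(role_id: str, plan_text: str) -> str:
    """Produce a stamp binding one reviewer or certifier to this exact plan text."""
    return hmac.new(KEYS[role_id], plan_text.encode("utf-8"), hashlib.sha256).hexdigest()


def is_authorized(plan_text: str, approvals: dict) -> bool:
    """Require a valid stamp from every listed role over the plan as written."""
    for role_id in KEYS:
        stamp = approvals.get(role_id)
        if stamp is None or not hmac.compare_digest(stamp, sign(role_id, plan_text)):
            return False
    return True


def run_agent(plan_text: str, approvals: dict, action):
    """Refuse to act unless the plan was approved before execution."""
    if not is_authorized(plan_text, approvals):
        raise PermissionError("plan is not reviewed and certified; refusing to act")
    return action()


plan = "Reconcile vendor invoices for March"
approvals = {role_id: sign(role_id, plan) for role_id in KEYS}
result = run_agent(plan, approvals, lambda: "done")
```

Because each stamp covers the plan text itself, changing even one word of the plan after approval makes `run_agent` raise `PermissionError`. A missing reviewer or certifier has the same effect, which is the "no silent defaults" property in miniature.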

For organizations in regulated industries, these features often become operational requirements—especially where mistakes can lead to compliance failures, financial loss, or real-world harm. Traceable delegation isn’t a conceptual ideal. It’s an operational necessity in systems where autonomy must remain accountable.

At first glance, Dokugent looks like a command-line tool. And it is.

But behind the CLI is a protocol mindset. A way of working where agents don’t just perform—they account for themselves. It may look like a utility, but it behaves like infrastructure.

Dokugent introduces:

- Markdown-native plans: Human-readable, version-controllable, and structured for collaboration. A compliance officer or product manager can draft a plan. A developer can review it. An agent can simulate it. Everyone sees the same file: clear, trackable, and ready for certifiable execution.

- doku:// URIs: These unique identifiers persist across versions and link every action back to its authorized plan. This creates a namespace for agent integrity.

- Chain-of-custody records: Every plan embeds authorship, review, and certification metadata. Nothing is left implicit.
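As a rough illustration of how an identifier and custody metadata might fit together, here is a small Python sketch. The URI layout `doku://<collection>/<plan-name>`, the `Custody` fields, and the in-memory `PLANS` registry are all assumptions made for this example; the actual doku:// format and schema are not specified here.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Custody:
    """Hypothetical custody metadata: who wrote, reviewed, and certified a plan."""
    author: str
    reviewer: str
    certifier: str

    def is_complete(self) -> bool:
        # "Nothing is left implicit": every role must be filled in.
        return all([self.author, self.reviewer, self.certifier])


# Hypothetical registry keyed by (collection, plan name).
PLANS = {
    ("plans", "invoice-recon"): Custody("alice", "bob", "carol"),
    ("plans", "draft-memo"): Custody("dave", "", ""),
}


def parse_doku_uri(uri: str) -> tuple:
    """Split an assumed doku://<collection>/<plan-name> URI into its two parts."""
    parts = urlsplit(uri)
    name = parts.path.strip("/")
    if parts.scheme != "doku" or not parts.netloc or not name:
        raise ValueError(f"not a doku:// URI: {uri!r}")
    return parts.netloc, name


def custody_for(uri: str) -> Custody:
    """Resolve an action's URI back to the custody record of its plan."""
    return PLANS[parse_doku_uri(uri)]


ready = custody_for("doku://plans/invoice-recon").is_complete()
pending = custody_for("doku://plans/draft-memo").is_complete()
```

Here `ready` is true and `pending` is false, since the draft memo has an author but no reviewer or certifier. Any trace entry that carries such a URI can be walked back to a named set of people, which is the ledger-reference idea from the table earlier in this post.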

Dokugent functions as both a CLI tool and a governance protocol—shaping how agent systems can be built with trust and traceability embedded from the start. Instead of designing around capability, we design around accountability.

Even if you never use Dokugent, you may still want your agents to behave as if they were governed by it.

Because when agents are certified, they aren’t just processes—they become entities. Identity markers embedded in Dokugent’s schema allow agents to establish who they are, who they represent, and what authority they carry. This makes possible cryptographic handshakes, traceable interactions, and secure agent-to-agent communication—no mystery, no guesswork.

That’s the mark of a protocol: not just behavior, but verifiable identity and interoperable trust.

Prompt hacks and sandbox demos made early AI exciting—but not safe. In this new era of agentic AI, we need more than capability. We need infrastructure that knows what it’s doing, why it’s doing it, and who said it could.

That’s what Dokugent is trying to build: a system that makes delegation visible, auditable, and real. Not by inventing new forms of trust, but by reviving old ones. Accounting didn’t just track value; it tracked consent, responsibility, and consequence. Dokugent extends that lineage into code.

If you’re building systems meant to scale, make sure your accountability scales with them.

That’s why this series exists. To remind us that trust isn’t a feeling—it’s a system. And we can build it.

In Part 3, we explore how these principles become practical—how Dokugent’s CLI enables cryptographically signed delegation, human-in-the-loop review, and traceable agent behavior in real systems.